EVPN (RFC 7432) Explained

EVPN, or Ethernet VPN, is a new standard that has finally been given an RFC number. Many vendors have been working on implementations since the early draft versions, and even before that Juniper already used the same technology in its QFabric product. RFC 7432 was previously known as draft-ietf-l2vpn-evpn.

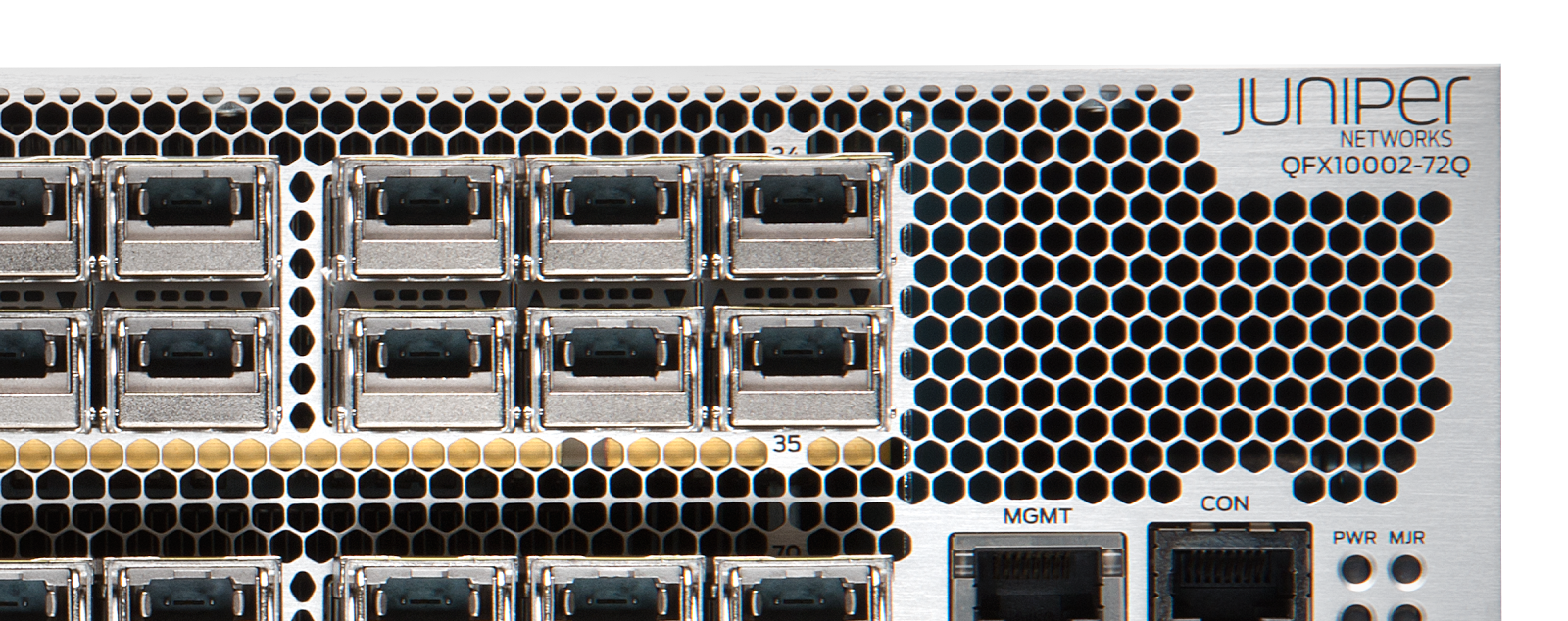

EVPN was initially targeted as a Data Center Interconnect technology, but it is now also deployed inside Data Center Fabric networks, for use within a single DC. In this blog I will explain why to use it, how its features work, and finally which Juniper products support it.
